
What is Linear Regression?

Linear regression is a predictive analysis algorithm. It is a statistical method that models the relationship between a dependent variable and one or more independent variables. Because the fitted relationship takes the form of a straight line, the method is called linear regression. It is one of the most common types of predictive analysis.

It is used to predict the value of the dependent variable when the value of the independent variable is known. A regression graph is a scatterplot of a dataset in which x is the independent variable and y is the dependent variable. The regression line is the straight line that best fits these data points, usually the line that minimizes the sum of the squared vertical distances between the points and the line. Thus, fitting and analyzing a regression line on a regression graph is called linear regression.
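
The sketch below makes "best fits" concrete. It scores candidate lines by their sum of squared vertical distances, which is the least-squares criterion, and keeps the lowest-scoring line from a coarse grid. The data points, the grid bounds and the helper name `sse` are hypothetical. Brute-force search is used only for illustration; in practice the coefficients are computed directly with closed-form formulas.

```python
# Hypothetical (x, y) observations
points = [(1, 2.1), (2, 3.9), (3, 6.2), (4, 7.8), (5, 10.1)]


def sse(a, b, pts):
    """Sum of squared vertical distances between the points and the line y = a + b*x."""
    return sum((y - (a + b * x)) ** 2 for x, y in pts)


# Try every intercept in [-1, 1] and every slope in [1, 3], in steps of 0.01
candidates = ((i / 100, j / 100) for i in range(-100, 101) for j in range(100, 301))
best = min(candidates, key=lambda ab: sse(ab[0], ab[1], points))

print(f"best line: y = {best[0]:.2f} + {best[1]:.2f}x, SSE = {sse(*best, points):.4f}")
```

For these points the search settles on a = 0.05 and b = 1.99. That is the exact least-squares solution for this data.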

Key Takeaways

- Linear regression is a statistical model used to measure the strength and direction of the relationship between a dependent variable and one or more independent variables. The dependent variable changes in response to changes in the independent variables.

- Linear regression models are classified into two subtypes: simple and multiple linear regression.

- The validity of a regression depends on assumptions such as linearity, homoscedasticity, normality of residuals, independence, and the absence of multicollinearity.

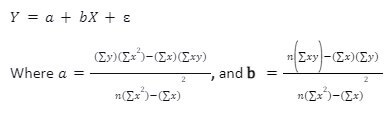

- In the formula Y = a + bX + ε, 'Y' is the dependent or outcome variable, 'X' is the independent variable, 'a' is the y-intercept, 'b' is the slope of the regression line, and 'ε' is the error term.

Linear Regression Explained

Linear regression is a model that defines a relationship between a dependent variable 'y' and an independent variable 'x.' It is widely applied in machine learning and statistics.

It applies to scenarios where changes in the value of one variable depend significantly on changes in the value of a second variable. The dependent variable, also called the output variable, varies with changes in the independent variable.

It is classified into two types:

- Simple Linear Regression: It is a regression model with a single independent variable, in which the relationship is expressed as the equation of a straight line. Here the dependent variable, y, is a function of the independent variable, x. It is denoted as Y = a + bX + ε, where 'a' is the y-intercept, 'b' is the slope of the regression line, and 'ε' is the error term.

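- Multiple Linear Regression: It is a form of regression analysis in which the dependent variable depends on the variation in two or more independent variables. It is denoted as Y = a + b1X1 + b2X2 + ... + bnXn + ε, where each coefficient b measures the effect of its independent variable while the others are held constant.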
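
Here is a minimal sketch of multiple linear regression with two independent variables. It solves the ordinary least-squares normal equations (XᵀX)β = Xᵀy in plain Python. The function name `fit_multiple` and the data are hypothetical. The data are generated exactly from Y = 1 + 2X1 + 3X2 with no error term, so the fit should recover those coefficients. Real projects would normally rely on a numerical library rather than hand-rolled elimination.

```python
def fit_multiple(rows, y):
    """Ordinary least squares for y = a + b1*x1 + ... + bk*xk via the normal equations.

    rows: one list of predictor values per observation.
    Returns [a, b1, ..., bk].
    """
    # Prepend a column of ones so the first coefficient is the intercept a
    X = [[1.0] + [float(v) for v in row] for row in rows]
    k = len(X[0])
    # Build the augmented system [X^T X | X^T y]
    m = [
        [sum(r[i] * r[j] for r in X) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(X, y))]
        for i in range(k)
    ]
    # Forward elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda idx: abs(m[idx][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, k):
            factor = m[row][col] / m[col][col]
            for c in range(col, k + 1):
                m[row][c] -= factor * m[col][c]
    # Back substitution
    beta = [0.0] * k
    for i in range(k - 1, -1, -1):
        known = sum(m[i][j] * beta[j] for j in range(i + 1, k))
        beta[i] = (m[i][k] - known) / m[i][i]
    return beta


# Hypothetical observations of two independent variables (X1, X2)
rows = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 5]]
# Dependent variable generated exactly from Y = 1 + 2*X1 + 3*X2 (no error term)
y = [1 + 2 * x1 + 3 * x2 for x1, x2 in rows]

a, b1, b2 = fit_multiple(rows, y)
print(f"a = {a:.3f}, b1 = {b1:.3f}, b2 = {b2:.3f}")  # prints: a = 1.000, b1 = 2.000, b2 = 3.000
```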

In practical scenarios, it is not always possible to attribute the change in an event, object, factor, or variable to a single independent variable. Rather, changes in the dependent variable often result from several factors that may be linked to each other. Thus, multiple regression analysis plays a crucial role in real-world applications.

Before choosing this model, researchers need to check how the dependent and independent variables are related. Linear regression is suitable only if the relationship between the variables is linear, so it is not always the best fit for real-world problems. For example, age and wages do not have a linear relationship. Wages usually increase with age during a working life, but after retirement age keeps increasing while wages decrease.
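
A small sketch illustrates the age and wage example. The wage figures are hypothetical and deliberately rise and then fall with age. A straight line fitted to them by least squares has zero slope and an R² of 0, even though wages clearly depend on age. The helper names `fit_line` and `r_squared` are illustrative.

```python
def fit_line(xs, ys):
    """Least-squares intercept a and slope b for y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def r_squared(xs, ys, a, b):
    """Share of the variation in y explained by the line y = a + b*x."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


# Hypothetical wages (in thousands) that rise with age, peak, then fall
ages = [25, 35, 45, 55, 65]
wages = [30, 50, 60, 50, 30]

a, b = fit_line(ages, wages)
print(f"a = {a:.1f}, b = {b:.1f}, R^2 = {r_squared(ages, wages, a, b):.2f}")
# prints: a = 44.0, b = 0.0, R^2 = 0.00
```

The flat fitted line at the average wage hides the strong curved pattern. This is why checking the shape of the relationship first matters.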

Linear Regression Formula

The dependent variable is expressed as a function of the independent variable by the following linear regression equation:

$$Y = a + bX + \varepsilon$$

Here:

- 'Y' is the dependent or outcome variable;

- 'a' is the y-intercept;

- 'b' is the slope of the regression line;

- 'X' is the independent or exogenous variable; and

- 'ε' is the error term, which captures the variation in Y that the line does not explain.

Note – The above formula applies to simple linear regression, which has only one independent variable.

Calculation

Linear regression is computed in three steps when the values of x and y variables are known:

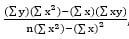

- First, make a table listing x, y, xy, and x² for each observation. Then, sum each column to obtain Σx, Σy, Σxy, and Σx², the components needed to calculate a and b.

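- Then, substitute Σx, Σy, Σxy, and Σx² into the following formulas, where n is the number of observations:

$$a = \frac{(\Sigma y)(\Sigma x^2) - (\Sigma x)(\Sigma xy)}{n(\Sigma x^2) - (\Sigma x)^2}$$

$$b = \frac{n(\Sigma xy) - (\Sigma x)(\Sigma y)}{n(\Sigma x^2) - (\Sigma x)^2}$$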

- Finally, substitute the values of a and b into the formula Y = a + bX + ε to describe the linear relationship between the x and y variables.
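
The three steps can be sketched in Python as follows. The code applies the standard least-squares formulas for a and b, written in terms of the column sums. The data are a small hypothetical example with n = 5, Σx = 15, Σy = 20, Σxy = 66 and Σx² = 55. By hand, b = (5 × 66 - 15 × 20) / (5 × 55 - 15²) = 30 / 50 = 0.6 and a = (20 × 55 - 15 × 66) / 50 = 110 / 50 = 2.2. The function name `fit_from_sums` is illustrative.

```python
def fit_from_sums(xs, ys):
    """Simple linear regression from the column sums of an (x, y, xy, x^2) table."""
    n = len(xs)
    # Step 1: tabulate x, y, xy and x^2 for each observation, then sum each column
    table = [(x, y, x * y, x * x) for x, y in zip(xs, ys)]
    sum_x = sum(row[0] for row in table)
    sum_y = sum(row[1] for row in table)
    sum_xy = sum(row[2] for row in table)
    sum_x2 = sum(row[3] for row in table)
    # Step 2: substitute the sums into the formulas for a and b
    denom = n * sum_x2 - sum_x ** 2
    a = (sum_y * sum_x2 - sum_x * sum_xy) / denom
    b = (n * sum_xy - sum_x * sum_y) / denom
    return a, b


xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
a, b = fit_from_sums(xs, ys)
# Step 3: plug a and b into the fitted line Y = a + bX
print(f"Y = {a:.2f} + {b:.2f}X")  # prints: Y = 2.20 + 0.60X
print(f"Predicted Y at X = 6: {a + b * 6:.2f}")
```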


Assumptions

The analyst needs to consider the following assumptions before applying the linear regression model to any problem:

- Linearity: The relationship between the dependent and independent variables should be linear. A scatterplot of the x and y variables can reveal whether it is.

- Homoscedasticity: The variance of the residuals should be constant along the regression line, regardless of the value of x. Analysts can check this assumption with a plot of residuals against fitted values; a funnel-shaped spread suggests the variance is not constant.

- Normality: The residuals, rather than the x and y values themselves, should be approximately normally distributed. Analysts can check this with a Q-Q plot or a histogram of the residuals.

- Independence: The observations should show no autocorrelation; that is, consecutive residuals should be independent of one another. Analysts commonly use the Durbin-Watson test to check this assumption.

- No Multicollinearity: Excessive correlation between independent variables can mislead the analysis, so the independent variables should not be highly correlated with each other. To avoid this issue, analysts often remove or combine variables with a high variance inflation factor (VIF). A high VIF indicates that a variable is largely explained by the other independent variables.