
What Is Backpropagation?

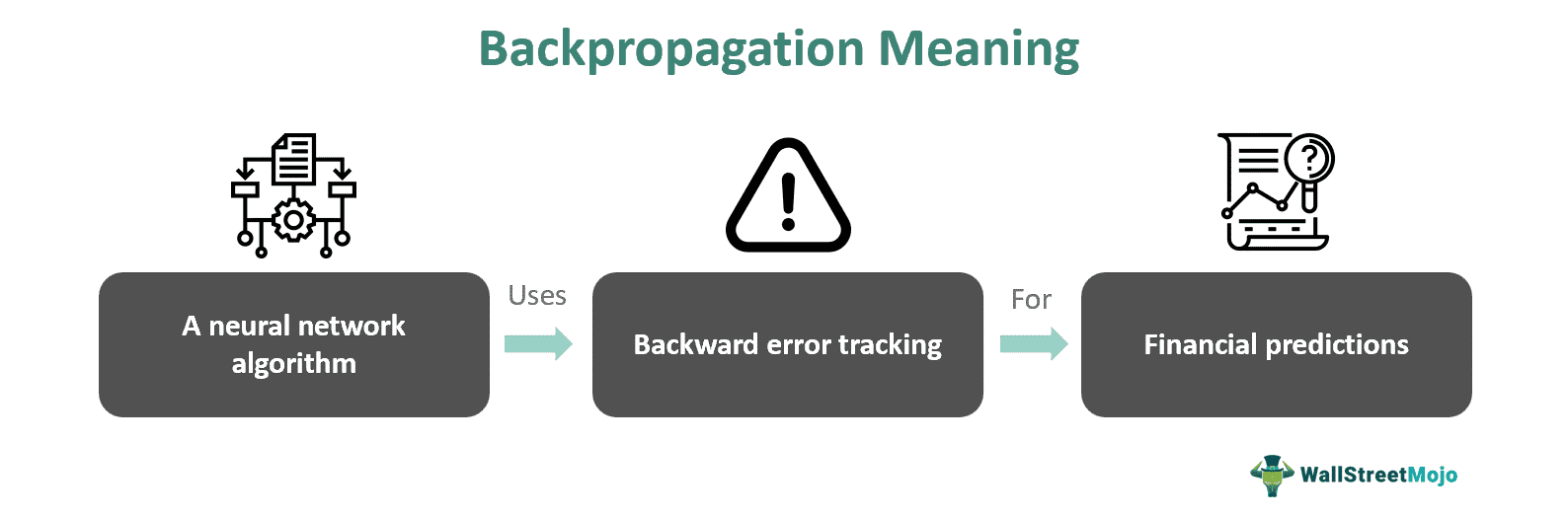

Backpropagation is an algorithm for training a neural network that propagates the prediction error in the reverse direction, i.e., starting from the output nodes and working back to the input nodes. In finance, it is used to train models for financial and stock market predictions.

Financial institutions often use this technique to build predictive models that support trading decisions based on a company's financial records and historical data. It facilitates the forecasting of stock market risks, returns, and ratings. To do so, the algorithm works out how much each node contributed to the loss or error and adjusts the weights to minimize that loss.

Key Takeaways

- Backpropagation is a general artificial neural network training algorithm that adjusts weights using backward error tracking; in finance, it is applied to non-linear and time-series data.

- It uses historical data and the company's financial records to depict the potential trend or pattern in financial markets.

- It is a widely used modern technique in the capital markets for analysts, investors, and traders to devise stock market strategies to mitigate risk and maximize returns.

Backpropagation In Finance Explained

Backpropagation in finance is the application of an artificial neural network training algorithm that handles non-linear and time-series data by adjusting weights through backward error tracking. Researchers have proposed different variations, such as hybrid models integrating technical indicators and optimization algorithms, to enhance prediction accuracy. Such techniques have shown promising results in forecasting stock prices and foreign exchange rates. Moreover, these methods draw on multiple factors, including market indicators, technical analysis, and fundamental company data, to improve prediction performance.

The capital market comprises various financial intermediaries, like commercial banks. The system functions on the circulation of securities, such as investing or trading in stocks and other assets, which engages people in economic activities. Therefore, predicting stock values accurately is crucial to maintaining investors' confidence in the market. Analysts, investors, and traders often employ the backpropagation algorithm for stock price prediction, with the aim of minimizing errors. It helps them adopt effective strategies for trading or investing to reduce risk and increase profitability.

Many traders use Saxo Bank International to research and invest in stocks across different markets. Its features like SAXO Stocks offer access to a wide range of global equities for investors.

Examples

Backpropagation in neural networks is a complex technique that is widely applied to stock market prediction. Some examples illustrating its practical application are as follows:

Example #1

Let's consider a simplified scenario for predicting stock prices using the backpropagation algorithm.

Suppose that, to estimate the next day's potential price of stock A, we use the following data as inputs:

- Historical stock prices

- Volume of trades

- Moving averages
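
As a rough sketch of how such inputs might be assembled, the snippet below pairs each day's price, volume, and moving average with the next day's price as the prediction target. The function names (`moving_average`, `build_samples`), the 3-day window, and all the numbers are hypothetical and for illustration only; they are not real market data.

```python
def moving_average(values, window):
    """Simple moving average; returns one value per full window."""
    return [sum(values[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(values))]


def build_samples(prices, volumes, window=3):
    """Pair each day's (price, volume, moving average) with the next day's price."""
    ma = moving_average(prices, window)
    samples = []
    # Day t needs a full window behind it and a next-day price ahead of it.
    for t in range(window - 1, len(prices) - 1):
        features = [prices[t], volumes[t], ma[t - (window - 1)]]
        target = prices[t + 1]
        samples.append((features, target))
    return samples


# Made-up numbers for illustration only (assumption, not real data).
prices = [100.0, 102.0, 101.0, 105.0, 107.0]
volumes = [1200, 1500, 1100, 1800, 1700]
samples = build_samples(prices, volumes)
```

In practice, the inputs would usually be scaled to a comparable range before being fed to a network, since prices and volumes differ by orders of magnitude.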

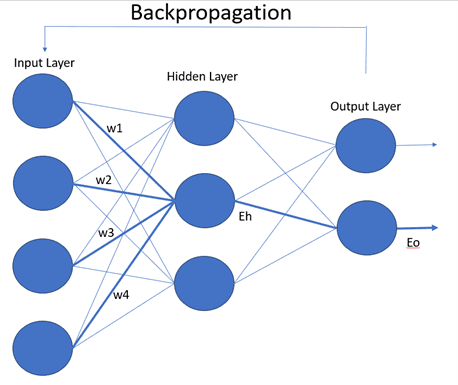

Once the network outputs a predicted price for stock A, we compare it with the actual price to obtain the error at the output, and then propagate that error back to each hidden unit to gauge how much each one contributed. Accordingly, we can update the weights of each connection in the network. The figure above is a pictorial representation of this technique.

While moving back from the error at the output (Eo) to the error at the hidden layer (Eh), we adjust the weights, w1, w2, w3, and w4, to improve predictive accuracy.
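
The backward pass described here can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the exact network in the figure: it assumes three scaled inputs, two sigmoid hidden units, one linear output, a squared-error loss, and a learning rate of 0.1. The starting weights and input values are made up.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, w_out):
    """Return the hidden activations and the predicted (scaled) price."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    y_pred = sum(w * h for w, h in zip(w_out, hidden))
    return hidden, y_pred


def backprop_step(x, y_true, w_hidden, w_out, lr=0.1):
    """One backpropagation update on one sample; returns the loss before the update."""
    hidden, y_pred = forward(x, w_hidden, w_out)
    e_out = y_pred - y_true  # error at the output (Eo)
    # Error at each hidden unit (Eh): the output error sent back through the
    # output weight and scaled by the sigmoid derivative h * (1 - h).
    e_hidden = [e_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    # Gradient-descent updates, applied in place.
    for j, h in enumerate(hidden):
        w_out[j] -= lr * e_out * h
    for j, row in enumerate(w_hidden):
        for i, xi in enumerate(x):
            row[i] -= lr * e_hidden[j] * xi
    return 0.5 * e_out ** 2


# Hypothetical scaled inputs: price, volume, moving average (assumptions).
x = [0.5, 0.2, 0.4]
y_true = 0.6
w_hidden = [[0.1, -0.2, 0.3], [0.4, 0.1, -0.1]]
w_out = [0.2, -0.3]
losses = [backprop_step(x, y_true, w_hidden, w_out) for _ in range(200)]
```

Repeating the step on the same sample should drive the recorded loss down, which is the behavior the weight adjustments are meant to produce. A real model would train on many samples and be evaluated on data it has not seen.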

Example #2

In an article on discerning errors in a neural network that models the stock price of Apple, the following considerations were made:

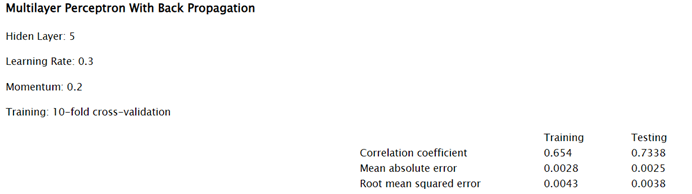

The researcher considered 5 different hidden layers and used 10-fold cross-validation during training to study the network's performance, with errors propagated in the reverse direction, and to construct a suitable model for predicting the company's future stock prices.
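
For readers unfamiliar with 10-fold cross-validation, the sketch below shows one plain way to split sample indices into ten train/validation folds. It is a generic illustration, not the article's actual procedure; the function name `k_fold_indices` and the sample count of 25 are hypothetical.

```python
def k_fold_indices(n_samples, k=10):
    """Yield (train_indices, validation_indices) for each of k contiguous folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


# 25 is an arbitrary sample count chosen for illustration.
folds = list(k_fold_indices(25, k=10))
```

Each sample is used for validation exactly once and for training in the other nine folds. With time-series data such as stock prices, plain k-fold splitting can let information from later dates leak into training, so a forward-chaining split is often preferred in practice.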

Advantages and Disadvantages

Backpropagation is the algorithm used to train neural networks in machine learning; it is widely used in stock market and financial predictions because it measures how much each weight contributes to the error. Given below are the various benefits and limitations of this technique in finance:

Advantages:

- Predictive Accuracy: Models trained with backpropagation have shown promising results in forecasting future stock prices and foreign exchange rates.

- Based on Historical Data: The technique uses the company's past stock price data and financial records to determine future price patterns.

- Backs Trading Strategies: It recognizes the stock price patterns accurately to help investors, traders, and analysts make informed investment choices.

- Allows Weight Adjustment: It traces the loss back to each node and adjusts the weights accordingly, which reduces prediction error and thereby supports risk mitigation.

- Complex Relationship Modeling: Backpropagation is better than the traditional methods of stock prediction due to its efficiency in modeling complex and diverse financial variables.

Disadvantages:

- Data Dependency: The outcome of Backpropagation significantly depends upon the quality of past data available since biased or inaccurate data can hinder the predictability of the model.

- Overfitting or Underfitting: The model may fit the training data too closely (overfitting) or too loosely (underfitting), and in either case fail to generalize to new data.

- Computational Complexity: It requires a large amount of computation, which is time-consuming and expensive and makes the algorithm difficult for a novice to adopt in practical analysis.

- Prone to Human Errors: When devising the neural network and performing backpropagation, there is a chance of manual, mathematical, and implementation errors.

Backpropagation vs. Forward Propagation vs. Gradient Descent

Backpropagation, forward propagation, and gradient descent are three distinct but related procedures in neural network training. They play different roles, as discussed below:

| Basis | Backpropagation | Forward Propagation | Gradient Descent |
|---|---|---|---|
| Definition | It is an algorithm that computes the gradient of the error with respect to each weight, working backward from the output to the input layers of a neural network model, so that the weights can be updated for error minimization. | It is the pass that moves from the input to the output nodes in a neural network, computing a weighted sum of the inputs at each neuron to derive the final result. | It is an optimization algorithm for minimizing the loss function during neural network training by adjusting the weights iteratively in the opposite direction of the gradient of the loss with respect to each weight. |
| Purpose | It evaluates the gradient of loss related to each weight. | It computes the network's prediction, such as a future stock price, without adjusting the weights. | It helps to determine the optimal set of weights that ensure minimum losses or errors. |
| Use | Neural network training, where it supplies the gradients for weight updates and loss or error minimization. | Neural network training and inference phases. | A general optimization algorithm used for neural network training and machine learning. |
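
To make the division of labor in the table concrete, here is a minimal sketch that keeps the three roles in separate functions. It assumes the simplest possible model, a single linear neuron with a squared-error loss; the inputs, target, and learning rate are made-up values.

```python
def forward_propagation(weights, x):
    """Input -> output: weighted sum of the inputs; no weights change."""
    return sum(w * xi for w, xi in zip(weights, x))


def backpropagation(weights, x, y_true):
    """Output -> input: gradient of 0.5 * (y_pred - y_true)**2 for each weight."""
    error = forward_propagation(weights, x) - y_true
    return [error * xi for xi in x]


def gradient_descent_step(weights, gradients, lr=0.1):
    """Optimizer: move each weight against its gradient."""
    return [w - lr * g for w, g in zip(weights, gradients)]


# Hypothetical single training sample (assumption for illustration).
weights = [0.0, 0.0]
x, y_true = [1.0, 2.0], 3.0
for _ in range(50):
    grads = backpropagation(weights, x, y_true)
    weights = gradient_descent_step(weights, grads)
prediction = forward_propagation(weights, x)
```

Forward propagation only produces a prediction, backpropagation only produces gradients, and gradient descent only applies the update. Training combines all three in a loop, while inference needs forward propagation alone.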

Disclosure: This article contains affiliate links. If you sign up through these links, we may earn a small commission at no extra cost to you.