Key Takeaways

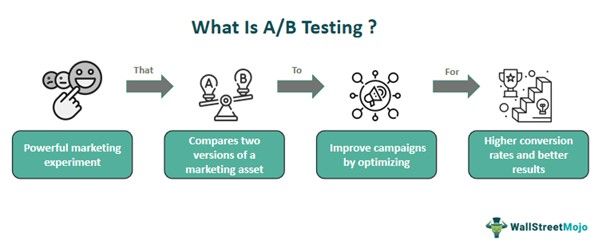

- A/B testing is a marketing experiment that compares two variations of a marketing asset, such as a web page, to determine which performs better, helping marketers make informed choices.

- It involves defining the objective, identifying the variable, creating variations, splitting the audience, implementing tracking, running and monitoring the test, analyzing the results, and implementing the winning version.

- It gives businesses real-world data for informed decisions, but it only allows for comparison of two versions of an asset.

- Digital experiences can be optimized with A/B testing, which compares two versions of an asset; canary testing, which rolls out changes gradually; and multivariate testing, which varies multiple elements at once.

A/B Testing Definition

A/B Testing refers to a marketing experiment that compares two forms of a marketing asset to ascertain which performs better. It helps marketers gather insights and data for choosing the right marketing assets to build better relations with customers and foster sales.

The marketing industry uses it to compare the performance of two marketing assets on metrics such as user engagement, click-through rate, and conversions. Participants are randomly assigned to the versions, which yields unbiased outcomes and valuable insights into user behavior and preferences. It aids in improving marketing campaigns to achieve better conversion.
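One common way to implement random assignment is to hash a stable user identifier, so that the same visitor always sees the same version. The following is a minimal sketch under that assumption; the function name `assign_variant`, the experiment label, and the user ID format are hypothetical and not taken from any particular tool.

```python
import hashlib

def assign_variant(user_id, experiment, variants=("A", "B")):
    """Deterministically map a user to one variant with roughly equal odds."""
    # Hash the experiment name together with the user ID so that a user's
    # group in one experiment does not determine their group in another.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the hash as an integer and pick a bucket by remainder.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

# Hypothetical usage: the same user always gets the same answer.
first = assign_variant("user-42", "checkout-button")
second = assign_variant("user-42", "checkout-button")
print(first == second)
```

Because the assignment depends only on the inputs, no lookup table is needed to keep a returning visitor in the same group.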

A/B Testing In Marketing Explained

A/B testing is a controlled experiment in which two versions of a marketing asset are tested simultaneously to determine which one drives business metrics better and affects visitors the most. It has two sets: the control, which is the current version, and the variation, which contains one or more changes. First, marketers define a clear objective; then they choose the element of the variation whose performance they want to test.

Then, the two versions are shown to different portions of the audience to identify which one achieves the objective more effectively. A tracking mechanism measures the chosen metrics over a set period. Finally, the results are analyzed with statistical tools and techniques to draw conclusions and select the winning variation.
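The article does not name a specific statistical technique. As one possible illustration, a two-proportion z-test is often used to compare conversion rates. The sketch below assumes that choice; the function name and the visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts: 200 of 5,000 visitors convert on A, 250 of 5,000 on B.
z, p_value = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A p-value below a threshold fixed before the test (0.05 is a common convention) suggests the difference is unlikely to be random noise alone.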

It is an accessible and versatile marketing tool that businesses of any size can apply. It helps firms convert more visitors into customers and enhances their marketing efforts. It is widely used in direct mailers, advertisements, landing pages, websites, and email campaigns. Marketers use it to test various aspects of their marketing campaign, like promotional offers, design, messaging, and pricing strategies.

It has numerous implications, such as revealing user behavior and preferences so that marketers can determine which elements of a campaign actually work. Hence, they can optimize ad campaigns and improve overall performance by systematically testing different versions of marketing assets. As a result, it is used across industries like e-commerce, digital marketing, and SaaS.

A firm can increase its conversion rates and revenue by optimizing its marketing campaigns through A/B testing. Consequently, firms can improve their profitability and shareholder value. Financial institutions use it for customer segmentation, user experience design, and website optimization.

How To Do A/B Testing?

Optimizing a digital presence calls for the right A/B testing tools. Whether the test runs on a dedicated A/B testing platform or within a marketing campaign, a reliable A/B testing calculator helps ensure accurate results. A/B testing is therefore an essential strategy for refining a website, marketing efforts, and overall online performance. The steps to conduct it are as follows:

- Defining the objective: First, define the goal and determine what one wants to achieve, like enhancing conversion through the test.

- Identifying the variable: Select the element of a website or app, like layout or button, to be tested.

- Creating variations: Then, create two versions, A & B, of the selected element.

- Splitting the audience: Now, segregate the audience into two groups randomly to test the two versions of the element created earlier.

- Implementing tracking: Use analytical tools like Google Analytics to track the performance of each version.

- Running the test: Start the test of A & B, ensuring each group is shown only its assigned variation.

- Monitoring the test: Monitor the test for the pre-decided period so that enough data is collected.

- Analyzing results: Use statistical analysis to compare the performance of the two versions and determine which performed better against the predefined objective.

- Concluding: Based on the results, a proper conclusion must be drawn about which version met the goal of the test and which failed.

- Implementing changes: Finally, apply the better-performing version to the app or website.

The process and its conclusions should then inform future ad campaign strategies to attract more customer attention and drive more conversions.
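The tracking and monitoring steps above boil down to counting how many users saw each version and how many of them converted. A minimal sketch of such a tally follows; the `ExperimentLog` class and its method names are hypothetical and do not mirror Google Analytics or any other tool.

```python
from collections import defaultdict

class ExperimentLog:
    """Counts exposures and conversions per variant (hypothetical helper)."""

    def __init__(self):
        self.exposures = defaultdict(int)
        self.conversions = defaultdict(int)

    def record_exposure(self, variant):
        self.exposures[variant] += 1

    def record_conversion(self, variant):
        self.conversions[variant] += 1

    def conversion_rate(self, variant):
        shown = self.exposures[variant]
        return self.conversions[variant] / shown if shown else 0.0

    def observed_leader(self):
        # Variant with the highest observed rate; this alone does not
        # establish statistical significance.
        return max(list(self.exposures), key=self.conversion_rate)

# Hypothetical usage with made-up traffic.
log = ExperimentLog()
for variant, shown, converted in (("A", 100, 10), ("B", 100, 15)):
    for _ in range(shown):
        log.record_exposure(variant)
    for _ in range(converted):
        log.record_conversion(variant)
print(log.conversion_rate("A"), log.conversion_rate("B"), log.observed_leader())
```

The observed leader is only a starting point; the analysis step still needs a statistical test before a winner is declared.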

Examples

Let us use a few examples to understand the topic.

Example #1

Spotify, the music streaming platform, has introduced Confidence, a tool for software development teams. This tool empowers teams to efficiently set up, run, coordinate, and analyze user tests, including A/B testing and more complex scenarios. Currently, Confidence is in a private beta phase, offering access to a select group of users.

In terms of A/B testing, Spotify's blog post underscores the experience its scientists and engineers have gained in refining product testing methods over the years. This includes conducting simultaneous A/B tests and deploying AI recommendation systems across various platforms, such as the Spotify web player, to deliver a seamless user experience.

Confidence offers three access points: a managed service, a Backstage plugin, and API integration. Users interested in Confidence can join a waitlist, though a specific product release date remains undisclosed.

Example #2

In a hypothetical scenario, Sarah Anderson, a Senior Product Designer at EveryTech, a fictional tech company specializing in e-commerce platforms, reviewed session recordings. She detected an unusual spike in frustrated clicks on the Checkout Now button during online shopping.

After reviewing numerous sessions, Sarah concluded that the existing design, with two conflicting call-to-action (CTA) buttons on one page, was unclear to users and needed to be simplified to increase overall satisfaction. EveryTech decided to revamp the checkout process, splitting it into two pages, each with a single CTA button.

The new design was A/B tested against the old one using EveryTech's tools and integrations. Conversions increased by 26.5%. Sarah's streamlining of the checkout process, supported by EveryTech's capabilities and the data-driven insights from the A/B test, was instrumental in this success.

This hypothetical example demonstrates how A/B testing and data analysis can lead to a user-friendly e-commerce platform, boosting satisfaction and performance.
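An increase such as the 26.5% in this example is typically reported as relative lift: the change in conversion rate divided by the control's rate. The sketch below assumes that definition. The example does not state the underlying rates, so the 4.00% and 5.06% figures are invented purely to illustrate the arithmetic.

```python
def relative_lift(control_rate, variant_rate):
    """Relative change in conversion rate of the variant versus the control."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate

# Made-up rates, not taken from the example: 4.00% control, 5.06% variant.
lift = relative_lift(0.0400, 0.0506)
print(f"{lift:.1%}")
```

Note that a relative lift of 26.5% here corresponds to an absolute gain of only 1.06 percentage points, so it helps to report both.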

Pros And Cons

Pros

- Businesses can make informed decisions based on real-world data.

- Facilitates the enhancement of user experience by testing two versions, A and B, of a marketing campaign for the same product.

- It leads to higher conversion of campaigns through optimized elements like layouts, call-to-action buttons, and headlines.

- It saves businesses money by letting them improve ads in steps instead of making a large one-time investment without gains.

- Fosters a habit of regular improvement by promoting iterative modifications based on data analysis.

- It is easy to implement using A/B testing tools.

Cons

- Only two versions of an asset can be compared using it.

- It needs a substantial sample size to produce statistically significant results.

- Interactions among elements, or within a group of elements, are left out of its comparison.

- Sometimes, it produces misleading results because of seasonal effects or random fluctuations.

- Setting it up and analyzing its results can be time-consuming and resource-intensive.
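To make the sample-size drawback concrete, the number of users needed per group can be estimated before the test starts. The sketch below assumes the standard normal-approximation formula for comparing two proportions, with a 5% significance level and 80% power as conventional defaults; the function name and example rates are hypothetical.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_group(baseline, expected, alpha=0.05, power=0.80):
    """Approximate users needed in each group to detect baseline -> expected."""
    if baseline == expected:
        raise ValueError("baseline and expected rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    effect = expected - baseline
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical goal: detect a rise from a 4% to a 5% conversion rate.
print(sample_size_per_group(0.04, 0.05))
```

Smaller expected differences push the requirement up sharply, which is why low-traffic pages can take a long time to test.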

A/B Testing vs Canary Testing vs Multivariate Testing

Digital experiences may be optimized using a variety of techniques, including A/B testing, canary testing, and multivariate testing. These testing methods are contrasted in the following table:

| Basis | A/B Testing | Canary Testing | Multivariate Testing |
|---|---|---|---|
| Testing Method | It compares two versions of assets like webpages or apps. | It is the slow rollout of changes to a subset of users. | Multiple variations of several elements are tested here. |
| Objective | It helps to choose the better version of the asset. | It assists in assessing the impact on users while gathering feedback. | The best combination of multiple elements is chosen. |
| Number of Versions Compared | Only two versions can be compared. | It centers around gradual implementation and checking user feedback. | Many versions of elements get tested. |
| Determining Significance | Statistical significance is its basis for determining meaningful results. | Key metrics and user feedback are monitored and analyzed for performance. | It needs advanced statistical analysis techniques to analyze complex interactions. |
| Data Tracking and Analysis | It tracks and analyzes data for both versions. | It tracks and monitors data gradually as the rollout expands. | It tracks data across the multiple variations being tested. |
| Resource and Infrastructure | It requires tools and technical resources for analysis. | It requires the necessary rollout infrastructure. | It needs considerable resources because of its complexity. |
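The resource demands of multivariate testing follow from how quickly combinations multiply. As a rough illustration, the sketch below enumerates every combination in a full-factorial design; the element names and options are hypothetical.

```python
from itertools import product

def full_factorial(elements):
    """List every combination of options across the given page elements."""
    names = list(elements)
    option_lists = [elements[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*option_lists)]

# Hypothetical page elements and their options.
page_elements = {
    "headline": ["Save time", "Save money"],
    "button_color": ["green", "orange", "blue"],
    "hero_image": ["photo", "illustration"],
}
combinations = full_factorial(page_elements)
print(len(combinations))  # 2 * 3 * 2 = 12 versions to test
```

Each of those versions needs its own share of traffic, whereas an A/B test splits traffic only two ways.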